Is dbt the right ELT tool for you? 10 important considerations at a glance

In today’s data-driven world, choosing the right ELT tool to manage data transformation is a key decision for many organizations. dbt (data build tool) has gained popularity, but is it the right fit for your company? This article walks through 10 important aspects to consider when evaluating whether dbt aligns with your needs and infrastructure.

dbt is developed and maintained by dbt Labs. It covers the "T" in ELT: it turns raw data that has already been loaded into a data warehouse into analysis-ready tables and views. Data engineers and analysts write these transformations in SQL, organize them into workflows, and test the resulting models. Rather than running SQL queries on the warehouse by hand, teams let dbt automate this process, which makes it easier to standardize, test, and deploy data transformations. For a deeper introduction, see the official documentation [1]. Now let us look at 10 aspects in depth that may be important for a decision:

1. Open Source

At its core, dbt is an open-source tool released under the Apache License 2.0, which permits commercial use. Companies can therefore use it without paying for expensive software licenses. This is a major advantage, especially for small and medium-sized businesses that need powerful data transformation capabilities but have limited budgets for commercial tools. This open-source version, dbt Core, is a command-line tool.

dbt Labs also offers dbt Cloud, the paid, fully managed SaaS version of dbt. It adds features such as a web-based user interface, job scheduling, and integrated version control. Depending on your needs and budget, though, these features may be substituted with a combination of tools, cloud services, and third-party platforms that your company may already use.

2. Data Warehouse Compatibility

Instead of requiring companies to purchase or maintain new infrastructure, dbt leverages existing data warehouses. On platforms such as Snowflake, BigQuery, Redshift, or Databricks, dbt works directly within your company’s data warehouse through a platform-specific adapter. The list of trusted adapters is available in [2]; if your platform is not among them, a community-maintained adapter [3] may still exist. Because the transformations run on the scalable compute resources of the cloud data warehouse, dbt avoids the need for dedicated transformation servers or expensive hardware investments.

If a company wants or needs to manage infrastructure components itself, dbt can also be used on-premises. In this case, the company is responsible for managing the hardware, scaling, and infrastructure maintenance, which may be desirable or necessary depending on specific requirements.
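To make the adapter idea concrete, here is a minimal sketch of a `profiles.yml`, the file dbt Core reads to decide which warehouse to connect to. It assumes the `dbt-postgres` and `dbt-snowflake` adapters are installed; the profile name `my_project`, the account, role, warehouse, database and schema names, and the environment variable names are hypothetical placeholders. The `dev` target points at a self-hosted PostgreSQL database (as in an on-premises setup), the `prod` target at a Snowflake account.

```yaml
# profiles.yml (typically located in ~/.dbt/)
# Hypothetical profile: the top-level key must match `profile:` in dbt_project.yml
my_project:
  target: dev                    # default target used when no --target flag is given
  outputs:
    dev:                         # self-hosted PostgreSQL, e.g. on-premises
      type: postgres
      host: localhost
      port: 5432
      user: dbt_user
      password: "{{ env_var('DBT_POSTGRES_PASSWORD') }}"   # keep secrets out of Git
      dbname: analytics
      schema: dbt_dev
      threads: 4
    prod:                        # managed cloud data warehouse
      type: snowflake
      account: my_account        # placeholder account identifier
      user: dbt_user
      password: "{{ env_var('DBT_SNOWFLAKE_PASSWORD') }}"
      role: transformer
      warehouse: transforming
      database: analytics
      schema: dbt_prod
      threads: 8
```

Switching between the two is then a command-line flag such as `dbt build --target prod`; the models themselves can stay the same as long as they avoid platform-specific SQL.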

3. Automation and Quality Assurance

Automation reduces the need for manual interventions and ensures efficient workflows, which can save companies both time and money by reducing the need for a large data engineering team to maintain the data pipeline. dbt automates many data engineering tasks: it resolves the dependencies between models, runs them in the correct order, and executes data tests. Combined with Git, it also puts the transformation code under version control.

Its built-in testing helps catch issues early in the transformation process. This prevents expensive data errors from going unnoticed and affecting downstream analytics or reporting systems. Early detection of data issues means fewer disruptions, less rework, and therefore lower operational costs.

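```yaml
version: 2

models:
  - name: customer
    description: contains information about customers
    columns:
      - name: id_customer
        description: customer id, unique identifier
        data_tests:        # dbt 1.8+; older versions use the key `tests`
          - unique         # no duplicate customer ids
          - not_null       # every row must have a customer id
```

Code example 1: YAML file configuring automated tests for the column `id_customer`.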
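Besides `unique` and `not_null`, dbt ships two more built-in generic tests, `accepted_values` and `relationships`. The sketch below assumes a hypothetical `orders` model with an `id_customer` and a `status` column; the status values are placeholders. Setting `severity: warn` makes a failing test report a warning instead of an error.

```yaml
version: 2

models:
  - name: orders                         # hypothetical model
    columns:
      - name: id_customer
        data_tests:
          # every order must reference an existing customer
          - relationships:
              to: ref('customer')
              field: id_customer
      - name: status
        data_tests:
          # only these (placeholder) values are allowed; failures only warn
          - accepted_values:
              values: ['placed', 'shipped', 'returned']
              config:
                severity: warn
```

Running `dbt test`, or `dbt build`, which also builds the models, executes these checks as queries against the warehouse.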

4. Developer Efficiency

Two aspects in particular can increase efficiency:

- Firstly, dbt simplifies data transformations both for teams that are already familiar with SQL and for people without a strong programming background. For the former, there is no new language to learn, since SQL is widespread and many on your team may already know it; for the latter, the entry barrier is comparatively low.

- Secondly, dbt's modular structure allows users to reuse code, reduce duplication, and organize their transformation logic efficiently. It achieves this by integrating Jinja templating, which adds logic, variables, and dynamic behavior to SQL. Developers can deliver data projects faster and with fewer resources when they can fall back on modular code they created earlier.
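Part of this modularity is configured once at project level instead of in every model. The following `dbt_project.yml` is a minimal sketch with assumed names: `my_project`, the `staging` and `marts` folders, and the variable `start_date` are hypothetical. All models in a folder inherit the same materialization, and the project variable can be read from any model's SQL via Jinja as `{{ var('start_date') }}`.

```yaml
# dbt_project.yml (hypothetical project)
name: my_project
version: "1.0.0"
config-version: 2
profile: my_project          # must match the profile name in profiles.yml

model-paths: ["models"]
macro-paths: ["macros"]      # reusable Jinja macros live here

vars:
  start_date: "2024-01-01"   # read in models via the var() Jinja function

models:
  my_project:
    staging:                 # applies to everything under models/staging/
      +materialized: view
    marts:                   # applies to everything under models/marts/
      +materialized: table
```

Models then refer to each other with `{{ ref('model_name') }}` rather than hard-coded table names, which is also how dbt derives the dependency graph between them.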

5. Version Control and Collaboration

dbt integrates with Git, enabling teams to track changes, collaborate, and roll back changes to their code if needed. Version control and collaborative features prevent costly mistakes in data transformations and reduce the risks associated with data pipeline changes; fewer errors and less rework mean lower costs. Note that dbt itself does not store your data: only the transformation code is versioned via Git. However, Git integration also adds complexity, and your team must be comfortable with version control workflows.

6. Scalability

Because dbt pushes the computation down to cloud data warehouses, which scale their compute resources, companies are unlikely to outgrow dbt as their transformation tool. dbt grows with your business: larger data volumes are handled by the warehouse, so the transformation project does not have to be re-architected, although warehouse compute costs will rise with usage. This makes dbt a cost-efficient option for small startups and large enterprises alike.
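One dbt mechanism that helps with growing data volumes is the incremental materialization: after the first full build, only new or changed rows are processed instead of rebuilding the whole table. A minimal sketch in a properties file, assuming a hypothetical `fct_orders` model keyed by `id_order`. The model's SQL must additionally filter for new rows inside an `{% if is_incremental() %}` block (not shown), and how matching keys are applied depends on the adapter's incremental strategy.

```yaml
version: 2

models:
  - name: fct_orders              # hypothetical fact table
    config:
      materialized: incremental   # after the first run, process only new/changed rows
      unique_key: id_order        # key used to match incoming rows against existing ones
```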

7. Documentation

To provide an interactive and up-to-date view of your data transformations, dbt automatically generates documentation for your data models. Running the `dbt docs generate` command builds a static documentation website, viewable locally with `dbt docs serve`, containing information about your models, their relationships, and metadata.

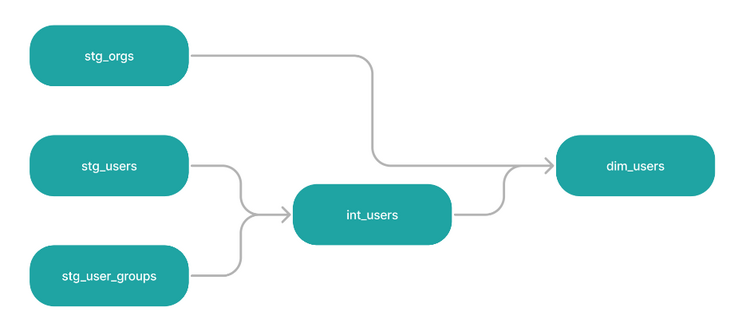

It visualizes your data lineage as a Directed Acyclic Graph (DAG), showing the flow and dependencies between models, which helps teams understand and manage their data pipelines effectively.

dbt’s documentation and tests are defined in YAML files (see the code example above), which ensures a structured approach to maintaining data integrity and clarity. dbt’s tools help, but they do not replace strong documentation practices: the descriptions must still be written and maintained by the team.
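The descriptions written in YAML are what populate the generated site. As a sketch, the following declares a hypothetical raw source, `crm.customers` (the database and schema names are placeholders), so that it appears as the starting node of the lineage graph once models select from it via `{{ source('crm', 'customers') }}`.

```yaml
version: 2

sources:
  - name: crm                    # hypothetical source system
    description: Raw tables loaded from the CRM by the extract/load step.
    database: raw
    schema: crm
    tables:
      - name: customers
        description: One row per customer as delivered by the CRM export.
        columns:
          - name: id
            description: Primary key in the CRM.
            data_tests:
              - unique
              - not_null
```

Running `dbt docs generate` followed by `dbt docs serve` then shows these descriptions alongside the lineage graph.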

8. Community Support and Ecosystem

The open-source nature of dbt means there is a large, active community of users contributing plugins, tutorials, and resources, such as the community adapters mentioned earlier. Companies can benefit from this ecosystem without paying for proprietary support or solutions. However, teams that opt for dbt Core should be aware that they need their own orchestration and scheduling solution, for example cron, Apache Airflow, or a CI/CD service, to run their workflows. For those who want it, dbt Cloud includes built-in orchestration among its additional paid features, while the core tool remains free, keeping the entry barrier low.
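For dbt Core, scheduling can be as simple as a CI/CD service running the CLI on a timer. The sketch below assumes GitHub Actions, the `dbt-postgres` adapter, a `profiles.yml` committed to the repository root whose password is read with `env_var('DBT_POSTGRES_PASSWORD')`, and a repository secret of the same name; the workflow file name and secret name are hypothetical.

```yaml
# .github/workflows/dbt_nightly.yml (hypothetical workflow)
name: dbt nightly build

on:
  schedule:
    - cron: "0 5 * * *"          # every day at 05:00 UTC
  workflow_dispatch:             # also allow manual runs from the GitHub UI

jobs:
  dbt-build:
    runs-on: ubuntu-latest
    env:
      # exposed to profiles.yml through env_var()
      DBT_POSTGRES_PASSWORD: ${{ secrets.DBT_POSTGRES_PASSWORD }}
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - name: Install dbt Core and the Postgres adapter
        run: pip install dbt-core dbt-postgres
      - name: Build models and run tests
        run: dbt build --profiles-dir .
```

A dedicated orchestrator such as Airflow becomes more attractive once runs need to be coordinated with upstream loading jobs rather than a fixed schedule.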

9. Costs

As cost is often one of the main factors in deciding for or against a tool, I'd like to summarize the ways in which dbt can affect your costs as an organization: its open-source foundation, its ability to leverage existing data infrastructure, the automation of repetitive tasks, and features that improve both efficiency and data quality. These factors reduce the need for expensive infrastructure, large teams, and lengthy troubleshooting, making dbt an attractive option for companies looking to scale their data operations without incurring high costs.

10. Outlook

Lastly, it is important to consider where a tool is headed. According to dbt Labs CEO Tristan Handy [4], both dbt Core, the free open-source tool, and dbt Cloud, the paid version, will continue to be maintained and expanded.

dbt Cloud also offers some interesting new features [5] that are available in beta:

- dbt Assist, a copilot feature that helps automate development.

- The Compare Changes feature highlights differences between production and pull request changes during CI jobs, ensuring accurate and efficient deployments.

- A new low-code visual editor with a drag-and-drop interface. I consider this particularly important because it makes it easier for people from different departments who do not have a programming background to work with dbt.

Conclusion

Ultimately, whether dbt is a fit for your company will depend on your existing infrastructure, team expertise, and growth plans. There are also anti-patterns, such as real-time data processing or complex multi-language transformations: dbt shines for SQL-based batch transformations within cloud data warehouses.

Is your company starting its data infrastructure from scratch? dbt can be a foundational tool that simplifies and accelerates the entire data transformation process from day one, especially if you are aiming for a cloud-native setup. Does your company have a smaller budget? You can take advantage of the open-source dbt Core. Does your organization already have a mature data infrastructure? You can still benefit from dbt if that infrastructure relies heavily on manual data transformations or custom-built ELT pipelines, where dbt's automation will pay off. Depending on your cloud-based warehouse, there is a good chance dbt will integrate smoothly. Lastly, does your staff have a high level of SQL expertise? Then dbt is likely a good fit.

Sources

[1] https://docs.getdbt.com/docs/introduction

[2] https://docs.getdbt.com/docs/trusted-adapters

[3] https://docs.getdbt.com/docs/community-adapters

[4] https://www.youtube.com/watch?v=lNZLcsHAdco&t=637s

[5] https://www.getdbt.com/blog/dbt-cloud-launch-showcase-2024