Kafka vs. Kinesis: Which Solution Is Right for Me? A Comparison Using Sensor Data

If you process large data streams, you'll eventually come across the comparison between Apache Kafka and AWS Kinesis. But which system is the right fit for which use case? This article aims to shed light on that question.

In a Nutshell

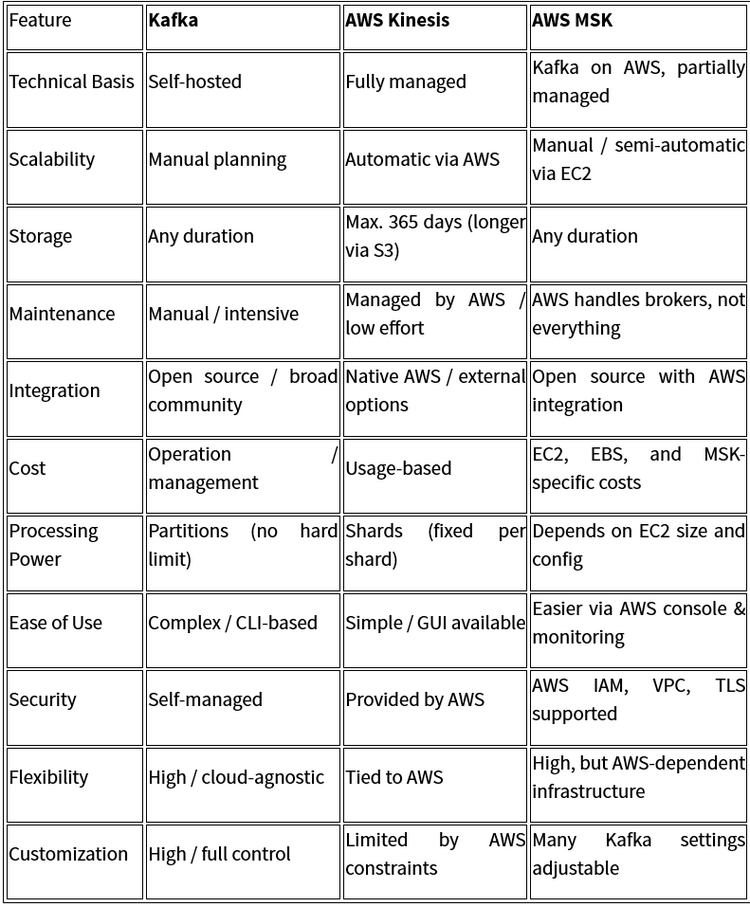

This article compares the two streaming services Apache Kafka and AWS Kinesis in terms of architecture, scalability, management, and typical use cases such as processing sensor data. Both systems enable reliable real-time transmission of large volumes of data but differ in operation and control.

Kafka, as an open-source solution, offers high flexibility and is cloud-agnostic, but self-hosting it requires deeper technical knowledge. Kinesis, on the other hand, stands out for its ease of use and seamless AWS integration but is primarily suited to AWS-centric infrastructures. AWS MSK offers a middle ground between self-hosting and a fully managed service: it runs Kafka as a managed service within AWS environments.

Use Case: Sensor Data

A classic example is the processing of high-volume sensor data. For instance, if you're measuring temperature, you need current readings — not those from 20 minutes ago — and you shouldn't have to wait 20 minutes for the next update. A sensor that only delivers data every 20 minutes would be unsuitable for an oven that needs to shut off upon reaching a target temperature — otherwise, your cake may be ruined.

In many use cases, historical data is secondary, although there's always the option to forward values to long-term storage. Other possible applications include real-time dashboards or log data analysis.

Both tools fundamentally serve the same purpose but differ in details. This article explores how both work and clarifies which technology is suitable for which scenario. It also looks at how AWS MSK and Kafka are connected and why MSK can be a good architectural complement to an open-source Kafka solution.

Purpose

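Both services are designed to transmit large volumes of data in real time (streaming). Partitioning enables scalability: under high load, new partitions (Kafka) or shards (Kinesis) can be added quickly. Scaling down is harder and requires planning. Kinesis can reduce capacity by merging shards, whereas Kafka cannot reduce the number of partitions of an existing topic, so shrinking there usually means creating a new topic with fewer partitions and migrating to it. Scaling up has a catch in Kafka as well: adding partitions changes which partition a given key maps to, which can disturb per-key ordering.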
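Why adding partitions needs planning becomes clearer once you look at how keyed records are routed. Kafka's default partitioner hashes the record key and takes the result modulo the partition count, so changing the count changes where keys land. (Kinesis behaves differently: splitting or merging a shard only reassigns the hash key range of the shards involved.) The sketch below is a simplification: it uses `zlib.crc32` as a stand-in for Kafka's actual murmur2 hash, and the helper names are hypothetical.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index (hash modulo partition count).

    zlib.crc32 is only a stand-in here; Kafka's default partitioner uses murmur2.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def moved_fraction(keys: list[str], old_count: int, new_count: int) -> float:
    """Return the share of keys whose partition changes when the count changes."""
    moved = sum(
        1
        for key in keys
        if partition_for(key, old_count) != partition_for(key, new_count)
    )
    return moved / len(keys)


if __name__ == "__main__":
    sensor_ids = [f"sensor-{i}" for i in range(1000)]
    # Growing a topic from 4 to 6 partitions: many sensors now land elsewhere,
    # so ordering per sensor is not guaranteed across the change.
    print(f"{moved_fraction(sensor_ids, 4, 6):.0%} of keys moved")
```

Assuming evenly spread hashes, roughly two thirds of the keys move when going from 4 to 6 partitions, because a key stays put only if its hash gives the same remainder modulo 4 and modulo 6.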

These systems also decouple data ingestion from data processing. If the same system both ingests and processes data, a processing problem can cause delays or data loss. A temperature sensor typically delivers only current values and does not resend readings that were missed, so anything the receiving system fails to process and store is usually lost for good. A stream placed in between keeps incoming records for a retention period, so a consumer that falls behind or restarts can resume where it left off.
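The decoupling described above can be sketched with a toy in-memory log: the producer only appends, and each consumer remembers how far it has read (in Kafka a committed offset, in Kinesis typically a checkpointed sequence number). The classes below are hypothetical and keep everything in memory; real brokers persist the log and bound it by a retention period.

```python
from dataclasses import dataclass, field


@dataclass
class RetainedLog:
    """Toy append-only log standing in for a Kafka partition or Kinesis shard."""

    records: list[float] = field(default_factory=list)

    def append(self, value: float) -> int:
        """Store a record and return its offset (position in the log)."""
        self.records.append(value)
        return len(self.records) - 1

    def read_from(self, offset: int, max_records: int = 100) -> list[float]:
        """Return up to max_records records starting at the given offset."""
        return self.records[offset:offset + max_records]


@dataclass
class Consumer:
    """Tracks its own read position, like a committed offset or checkpoint."""

    log: RetainedLog
    next_offset: int = 0

    def poll(self) -> list[float]:
        batch = self.log.read_from(self.next_offset)
        self.next_offset += len(batch)
        return batch


if __name__ == "__main__":
    log = RetainedLog()
    consumer = Consumer(log)

    # The sensor keeps producing while the consumer is down.
    for reading in [21.5, 22.0, 22.4]:
        log.append(reading)

    # After a restart the consumer resumes from its offset; nothing was lost.
    print(consumer.poll())  # [21.5, 22.0, 22.4]
    log.append(22.9)
    print(consumer.poll())  # [22.9]
```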

Kafka vs. Kinesis Comparison

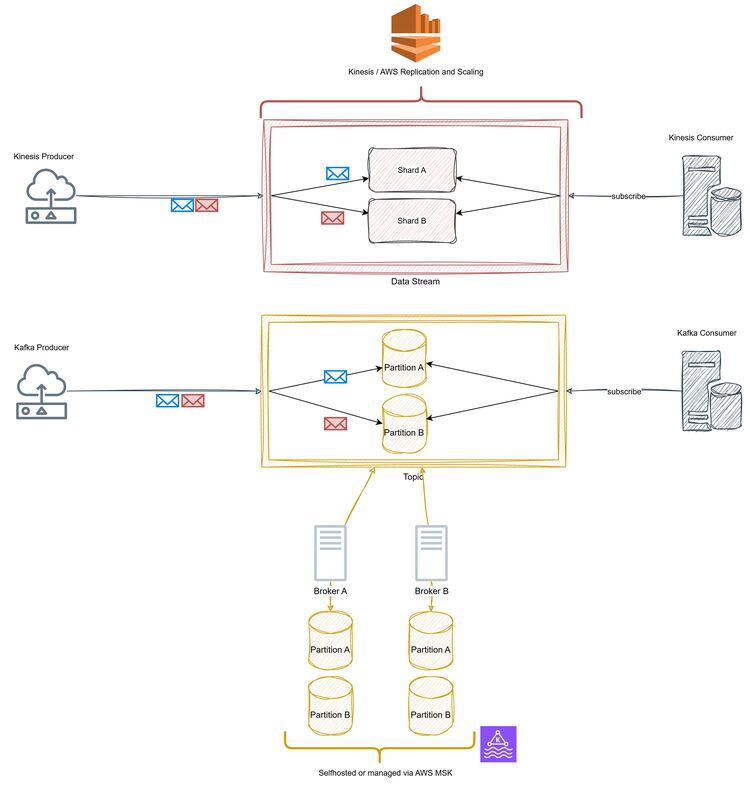

Architecture Overview

Kafka is an open-source system that organizes data into topics, which are split into partitions and stored and replicated across brokers (servers).

Kinesis is a fully managed AWS service using streams and shards.

Similarities:

- Data flow: Producer → Stream → Consumer

- Horizontal scalability via partitions (Kafka) or shards (Kinesis)

- Temporary storage allows decoupled data processing
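The "temporary storage" in the last point is a retention window: records are kept for a configurable time and then discarded, whether or not anyone has read them. By default, Kinesis Data Streams keeps records for 24 hours (extendable up to 365 days) and Kafka for 7 days (`log.retention.hours=168`); Kafka can additionally limit retention by size. The sketch below models only time-based retention, and the class name and explicit `now` parameter are illustrative assumptions. Real Kafka brokers delete whole log segments, so their trimming is coarser than this per-record version.

```python
from collections import deque


class RetentionBuffer:
    """Keeps (timestamp, value) records only while they are inside the retention window."""

    def __init__(self, retention_seconds: float) -> None:
        self.retention_seconds = retention_seconds
        self.records: deque[tuple[float, float]] = deque()

    def append(self, timestamp: float, value: float) -> None:
        # Records are assumed to arrive in timestamp order, so the oldest is on the left.
        self.records.append((timestamp, value))

    def expire(self, now: float) -> int:
        """Drop records older than the retention window; return how many were dropped."""
        cutoff = now - self.retention_seconds
        dropped = 0
        while self.records and self.records[0][0] < cutoff:
            self.records.popleft()
            dropped += 1
        return dropped


if __name__ == "__main__":
    day = 24 * 60 * 60
    buffer = RetentionBuffer(retention_seconds=day)  # like a 24-hour stream
    buffer.append(0, 20.1)
    buffer.append(day / 2, 21.3)
    # Thirty hours after the first reading, only the second one is still retained.
    print(buffer.expire(now=30 * 60 * 60), list(buffer.records))
```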

Kinesis

Kinesis is a fully managed streaming service by AWS. AWS handles the infrastructure and availability, so there's no self-managed setup required. In on-demand capacity mode, Kinesis also scales shard capacity automatically; in provisioned mode, you choose the number of shards yourself. Because of its deep integration with AWS, Kinesis is ideal for users who want to get started quickly with minimal management overhead.

Core components:

- Producer

- Consumer

- Stream

- Shard
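How a record finds its shard can be sketched in a few lines. Kinesis hashes the record's partition key with MD5 into a 128-bit integer, and each shard owns a contiguous hash key range. The sketch assumes the key is hashed as UTF-8 bytes, the digest is read as a big-endian unsigned integer, and the stream's ranges are split evenly; a real stream reports each shard's actual `StartingHashKey`/`EndingHashKey`, which can differ after resharding. The function names are hypothetical.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def hash_key(partition_key: str) -> int:
    """Interpret the MD5 digest of the partition key as an unsigned 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, byteorder="big")


def even_shard_ranges(shard_count: int) -> list[tuple[int, int]]:
    """Split the hash key space into contiguous, inclusive (start, end) ranges.

    Assumes an evenly split stream; the last shard absorbs any remainder.
    """
    size = HASH_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * size
        end = HASH_SPACE - 1 if i == shard_count - 1 else start + size - 1
        ranges.append((start, end))
    return ranges


def shard_for(partition_key: str, ranges: list[tuple[int, int]]) -> int:
    """Return the index of the shard whose range contains the key's hash."""
    h = hash_key(partition_key)
    for index, (start, end) in enumerate(ranges):
        if start <= h <= end:
            return index
    raise ValueError("hash key not covered by any shard range")


if __name__ == "__main__":
    ranges = even_shard_ranges(4)
    for sensor in ["oven-1", "oven-2", "fridge-7"]:
        print(sensor, "-> shard", shard_for(sensor, ranges))
```

Because the mapping depends only on the key, all readings from one sensor go to the same shard, which preserves their order within that shard.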

Kafka

Kafka is an open-source project from the Apache Software Foundation. It can be self-hosted or used via services like AWS MSK. Self-hosting requires in-depth knowledge, especially for scaling, monitoring, and security. If less effort is desired, managed services like MSK are a good alternative.

Core components:

- Producer

- Consumer

- Topic

- Partition

- Broker
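For keyed records, Kafka's built-in partitioner hashes the serialized key with murmur2 and takes the result, made non-negative, modulo the topic's partition count (`Utils.murmur2` and `Utils.toPositive` in the Java client). Records without a key are spread across partitions by a different strategy. The Python port below is meant to illustrate the keyed scheme only; it has not been verified against a running cluster, and the function names are our own.

```python
def murmur2(data: bytes) -> int:
    """32-bit murmur2 with the seed used by Kafka, returned as an unsigned int."""
    mask = 0xFFFFFFFF
    m = 0x5BD1E995
    r = 24
    length = len(data)
    h = (0x9747B28C ^ length) & mask

    # Process the input four bytes at a time as little-endian words.
    for i in range(0, length - length % 4, 4):
        k = data[i] | (data[i + 1] << 8) | (data[i + 2] << 16) | (data[i + 3] << 24)
        k = (k * m) & mask
        k ^= k >> r
        k = (k * m) & mask
        h = (h * m) & mask
        h ^= k

    # Mix in the remaining 1 to 3 tail bytes (mirrors the Java switch fall-through).
    tail = length & ~3
    remaining = length % 4
    if remaining == 3:
        h ^= data[tail + 2] << 16
    if remaining >= 2:
        h ^= data[tail + 1] << 8
    if remaining >= 1:
        h ^= data[tail]
        h = (h * m) & mask

    # Final avalanche step.
    h ^= h >> 13
    h = (h * m) & mask
    h ^= h >> 15
    return h


def kafka_partition(key: bytes, num_partitions: int) -> int:
    """Clear the sign bit (like Kafka's toPositive) and take the modulo."""
    return (murmur2(key) & 0x7FFFFFFF) % num_partitions


if __name__ == "__main__":
    for sensor in ["oven-1", "oven-2", "fridge-7"]:
        print(sensor, "-> partition", kafka_partition(sensor.encode("utf-8"), 6))
```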

MSK as a Bridge Between Self-Hosting and Managed Service

For those who don't want to manage Kafka themselves, AWS MSK (Amazon Managed Streaming for Apache Kafka) offers a viable alternative. MSK takes over many tasks, such as provisioning and operating the brokers, automatic storage scaling, and security features (e.g., integration with AWS IAM), making it easier to integrate Kafka into AWS projects. With MSK Serverless, AWS also scales the cluster's capacity automatically.

This significantly reduces operational overhead, bringing it closer to that of Kinesis, while retaining Kafka's flexibility. Self-hosting Kafka, by contrast, demands deep expertise and high administrative effort, particularly for scaling, fault tolerance, and security.

Components Breakdown

Kinesis and Kafka work similarly at a high level. Both organize data into a central, named stream: a “data stream” in Kinesis, a “topic” in Kafka. For scalability, each stream is divided into smaller units: shards (Kinesis) and partitions (Kafka).

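Kafka also has brokers: servers that store the partitions. Each partition is replicated to several brokers (its replication factor), so the data survives the failure of a single broker. Kinesis has no direct equivalent because AWS handles replication and availability itself; Kinesis Data Streams synchronously replicates data across three Availability Zones.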
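To make the broker role concrete: each partition's copies are spread over distinct brokers, and the first replica in the list acts as the partition leader. The round-robin placement below is a simplified illustration; Kafka's actual assignment also randomizes the starting broker, shifts follower placement, and can be rack-aware. Both function names are hypothetical.

```python
def assign_replicas(
    num_partitions: int, brokers: list[int], replication_factor: int
) -> dict[int, list[int]]:
    """Place each partition's replicas on distinct brokers, round-robin.

    The first broker in each list plays the role of the partition leader.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        partition: [
            brokers[(partition + replica) % len(brokers)]
            for replica in range(replication_factor)
        ]
        for partition in range(num_partitions)
    }


def available_partitions(assignment: dict[int, list[int]], failed_broker: int) -> list[int]:
    """Partitions that keep at least one replica on a healthy broker."""
    return [
        partition
        for partition, replicas in assignment.items()
        if any(broker != failed_broker for broker in replicas)
    ]


if __name__ == "__main__":
    assignment = assign_replicas(num_partitions=3, brokers=[1, 2, 3], replication_factor=2)
    print(assignment)  # {0: [1, 2], 1: [2, 3], 2: [3, 1]}
    # With two copies of every partition, losing broker 2 leaves all partitions readable.
    print(available_partitions(assignment, failed_broker=2))  # [0, 1, 2]
```

With a replication factor of 1, the same broker failure would make partition 1 unavailable, which is why production topics typically use more than one replica.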

The core principle is the same: APIs allow you to develop your own producers (send data) and consumers (receive data), supported by various libraries. Data transfer between producers and consumers is managed by the system itself.
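Whichever system you choose, a producer ultimately hands over a key and an opaque byte payload: Kinesis calls the key a partition key and stores the payload as the record's data blob, while a Kafka record carries a key and a value. The following is a minimal sketch, assuming JSON encoding and a hypothetical `SensorReading` type, of serialization code that a Kafka or a Kinesis producer and consumer could share.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SensorReading:
    sensor_id: str
    celsius: float
    timestamp_ms: int


def to_record(reading: SensorReading) -> tuple[str, bytes]:
    """Build (key, payload); using the sensor ID as key keeps one sensor's readings together."""
    payload = json.dumps(asdict(reading), separators=(",", ":")).encode("utf-8")
    return reading.sensor_id, payload


def from_payload(payload: bytes) -> SensorReading:
    """Decode a payload received by a consumer back into a SensorReading."""
    return SensorReading(**json.loads(payload.decode("utf-8")))


if __name__ == "__main__":
    key, payload = to_record(SensorReading("oven-1", 180.5, 1_700_000_000_000))
    print(key, payload)
    print(from_payload(payload))
```

The key and payload could then be passed, for example, as `PartitionKey` and `Data` to the boto3 Kinesis client's `put_record`, or as key (encoded to bytes) and value to a Kafka producer; the transport-specific client code is deliberately left out here.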

Conclusion

Both systems serve the same purpose: reliable, scalable real-time transmission of large data volumes. Kafka shines with its flexibility and rich open-source ecosystem, while Kinesis stands out for its simplicity and deep AWS integration.

The choice depends on whether you prioritize maximum control or minimal operational overhead. It's also key to consider whether you need multi-cloud support. Kafka is suitable for cloud-agnostic setups, while Kinesis is ideal for fully AWS-based infrastructures.
